5/07/2018

Set Up a Spark Standalone Mode HA Cluster with a Shell Script

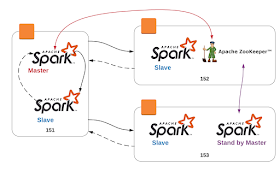

In a previous post [1], we showed how to set up a Spark cluster in standalone mode. Now we are going to improve that architecture by adding a standby master, which gives the master role high availability (fault tolerance).

[1] http://dhanuka84.blogspot.com/2018/05/setup-spark-standalone-mode-cluster.html

Set Up ZooKeeper

1. I am using ZooKeeper (ZK) version 3.4.12. Download and extract ZK from the site [1].

[1] http://www-eu.apache.org/dist/zookeeper/stable/

2. Go to the ZK folder and create the configuration file:

zookeeper-3.4.12]$ cp conf/zoo_sample.cfg conf/zoo.cfg

3. Edit the zoo.cfg file and set the data directory:

dataDir=/home/dhanuka/zookeeper-3.4.12/data

4. Start ZK

zookeeper-3.4.12]$ ./bin/zkServer.sh start
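The ZooKeeper steps above can be collected into a small shell function. This is a minimal sketch, assuming ZooKeeper 3.4.12 was extracted to the directory passed as the first argument and that zoo.cfg may be regenerated from zoo_sample.cfg on every run; configure_zk is a hypothetical helper name, not part of ZooKeeper.

```shell
#!/usr/bin/env bash
# Sketch of ZK steps 2-3: create zoo.cfg from the sample and point dataDir at <zk_home>/data.
# configure_zk is a hypothetical helper name, not part of ZooKeeper.
configure_zk() {
  local zk_home="$1"
  local data_dir="$zk_home/data"

  # Step 2: create the configuration file from the shipped sample.
  cp "$zk_home/conf/zoo_sample.cfg" "$zk_home/conf/zoo.cfg" || return 1

  # ZooKeeper keeps its snapshots in dataDir; create it up front.
  mkdir -p "$data_dir" || return 1

  # Step 3: replace the sample's dataDir line, or append one if it is missing.
  if grep -q '^dataDir=' "$zk_home/conf/zoo.cfg"; then
    sed -i "s|^dataDir=.*|dataDir=$data_dir|" "$zk_home/conf/zoo.cfg"
  else
    echo "dataDir=$data_dir" >> "$zk_home/conf/zoo.cfg"
  fi
}

# Usage (step 4 starts the server; 'status' reports whether it is running):
#   configure_zk /home/dhanuka/zookeeper-3.4.12
#   /home/dhanuka/zookeeper-3.4.12/bin/zkServer.sh start
#   /home/dhanuka/zookeeper-3.4.12/bin/zkServer.sh status
```

Regenerating zoo.cfg from the sample each time keeps the edit repeatable, in the same way the Spark script copies spark-env.sh.template before appending to it.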

Spark Standalone Mode ZooKeeper Configuration

1. HA configuration in $SPARK_HOME/conf/spark-env.sh (ZooKeeper runs on 10.163.134.152:2181):

SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=10.163.134.152:2181 -Dspark.deploy.zookeeper.dir=/spark"

2. The same configuration is applied on the standby master node (153).

3. The workers (slaves) should point to both masters, and the primary master is booted first.

4. The full script to boot up both masters and the workers:

Script

cp $SPARK_HOME/conf/spark-env.sh.template $SPARK_HOME/conf/spark-env.sh

cp $SPARK_HOME/conf/spark-env.sh.template $SPARK_HOME/conf/spark-second-env.sh

sed -i '$ a\SPARK_MASTER_HOST=10.163.134.151' $SPARK_HOME/conf/spark-env.sh

sed -i '$ a\SPARK_MASTER_WEBUI_PORT=8085' $SPARK_HOME/conf/spark-env.sh

sed -i '$ a\SPARK_MASTER_WEBUI_PORT=8085' $SPARK_HOME/conf/spark-second-env.sh

sed -i '$ a\SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=10.163.134.152:2181 -Dspark.deploy.zookeeper.dir=/spark"' $SPARK_HOME/conf/spark-env.sh

sed -i '$ a\SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=10.163.134.152:2181 -Dspark.deploy.zookeeper.dir=/spark"' $SPARK_HOME/conf/spark-second-env.sh

cp $SPARK_HOME/conf/slaves.template $SPARK_HOME/conf/slaves

sed -i '$ a\10.163.134.151\n10.163.134.152\n10.163.134.153' $SPARK_HOME/conf/slaves

echo " Stop anything that is running"

$SPARK_HOME/sbin/stop-all.sh

sleep 2

echo " Start Master"

$SPARK_HOME/sbin/start-master.sh

# Pause

sleep 10

echo "start stand by Master"

scp $SPARK_HOME/conf/spark-second-env.sh dhanuka@10.163.134.153:$SPARK_HOME/conf/

ssh dhanuka@10.163.134.153 'cp $SPARK_HOME/conf/spark-second-env.sh $SPARK_HOME/conf/spark-env.sh'

ssh dhanuka@10.163.134.153 'sed -i "$ a\SPARK_MASTER_HOST=10.163.134.153" $SPARK_HOME/conf/spark-env.sh'

# ha.conf is not referenced by any later command; the HA settings come from spark-env.sh

scp ha.conf dhanuka@10.163.134.153:/home/dhanuka/

ssh dhanuka@10.163.134.153 '$SPARK_HOME/sbin/start-master.sh --host 10.163.134.153'

sleep 5

echo " Start Workers"

#SPARK_SSH_FOREGROUND=true $SPARK_HOME/sbin/start-slaves.sh

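# Note: start-slaves.sh in Spark 2.2.1 ignores this argument and always passes spark://$SPARK_MASTER_HOST:$SPARK_MASTER_PORT to the workers, which is why the ps output below shows the workers registered with spark://10.163.134.151:7077 only.

SPARK_SSH_FOREGROUND=true $SPARK_HOME/sbin/start-slaves.sh spark://10.163.134.151:7077,10.163.134.153:7077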
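In Spark 2.2.1, start-slaves.sh derives the workers' master URL from SPARK_MASTER_HOST and SPARK_MASTER_PORT. One way to make every worker register with both masters is to do what start-slaves.sh does internally (run sbin/start-slave.sh on each host through sbin/slaves.sh) but pass the comma-separated multi-master URL explicitly. This is a sketch, assuming Spark is installed at the same $SPARK_HOME on every host in conf/slaves and that both masters use the default port 7077; ha_master_url and start_workers_ha are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: start one worker per host in conf/slaves, each registered with BOTH masters.
# ha_master_url and start_workers_ha are hypothetical helper names.

# Build a multi-master URL such as spark://10.163.134.151:7077,10.163.134.153:7077
# from a list of master hosts; the port defaults to 7077 unless SPARK_MASTER_PORT is set.
ha_master_url() {
  local port="${SPARK_MASTER_PORT:-7077}"
  local url=""
  local host
  for host in "$@"; do
    url="${url:+$url,}$host:$port"
  done
  echo "spark://$url"
}

# Mirror what start-slaves.sh does internally, but with the multi-master URL.
# Assumes SPARK_HOME is the same path on every worker host.
start_workers_ha() {
  local url
  url="$(ha_master_url "$@")"
  SPARK_SSH_FOREGROUND=true "$SPARK_HOME/sbin/slaves.sh" \
    cd "$SPARK_HOME" \; "$SPARK_HOME/sbin/start-slave.sh" "$url"
}

# Usage, in place of the start-slaves.sh line in the script:
#   start_workers_ha 10.163.134.151 10.163.134.153
```

Workers started with a multi-master URL can register with whichever master is the leader when they boot, rather than only with 10.163.134.151.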

Run Bootstrap Script & Validate

1. Save the script as setup.sh and run it:

$ sh setup.sh

Stop anything that is running

10.163.134.151: no org.apache.spark.deploy.worker.Worker to stop

10.163.134.152: no org.apache.spark.deploy.worker.Worker to stop

10.163.134.153: no org.apache.spark.deploy.worker.Worker to stop

no org.apache.spark.deploy.master.Master to stop

Start Master

starting org.apache.spark.deploy.master.Master, logging to /home/dhanuka/spark-2.2.1-bin-hadoop2.7/logs/spark-dhanuka-org.apache.spark.deploy.master.Master-1-bo3uxgmpxxxxnn.out

start stand by Master

spark-second-env.sh 100% 3943 12.6MB/s 00:00

ha.conf 100% 117 496.8KB/s 00:00

starting org.apache.spark.deploy.master.Master, logging to /home/dhanuka/spark-2.2.1-bin-hadoop2.7/logs/spark-dhanuka-org.apache.spark.deploy.master.Master-1-bo3uxgmpxxxxnn.out

Start Workers

10.163.134.151: starting org.apache.spark.deploy.worker.Worker, logging to /home/dhanuka/spark-2.2.1-bin-hadoop2.7/logs/spark-dhanuka-org.apache.spark.deploy.worker.Worker-1-bo3uxgmpxxxxnn.out

10.163.134.152: starting org.apache.spark.deploy.worker.Worker, logging to /home/dhanuka/spark-2.2.1-bin-hadoop2.7/logs/spark-dhanuka-org.apache.spark.deploy.worker.Worker-1-bo3uxgmpxxxxnn.out

10.163.134.153: starting org.apache.spark.deploy.worker.Worker, logging to /home/dhanuka/spark-2.2.1-bin-hadoop2.7/logs/spark-dhanuka-org.apache.spark.deploy.worker.Worker-1-bo3uxgmpxxxxnn.out

2. Validate the master and worker processes on 151:

$ ps -ef | grep spark

dhanuka 2178 1 8 23:20 pts/0 00:00:03 /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.151-1.b12.el7_4.x86_64/jre/bin/java -cp /home/dhanuka/spark-2.2.1-bin-hadoop2.7/conf/:/home/dhanuka/spark-2.2.1-bin-hadoop2.7/jars/* -Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=10.163.134.152:2181 -Dspark.deploy.zookeeper.dir=/spark -Xmx1g org.apache.spark.deploy.master.Master --host 10.163.134.151 --port 7077 --webui-port 8085

dhanuka 2289 1 22 23:20 ? 00:00:03 /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.151-1.b12.el7_4.x86_64/jre/bin/java -cp /home/dhanuka/spark-2.2.1-bin-hadoop2.7/conf/:/home/dhanuka/spark-2.2.1-bin-hadoop2.7/jars/* -Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=10.163.134.152:2181 -Dspark.deploy.zookeeper.dir=/spark -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://10.163.134.151:7077

dhanuka 2347 30581 0 23:21 pts/0 00:00:00 grep --color=auto spark

3. Validate the standby master and the worker on 153:

$ ps -ef | grep spark

dhanuka 4738 1 5 23:19 ? 00:00:02 /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.151-1.b12.el7_4.x86_64/jre/bin/java -cp /home/dhanuka/spark-2.2.1-bin-hadoop2.7/conf/:/home/dhanuka/spark-2.2.1-bin-hadoop2.7/jars/* -Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=10.163.134.152:2181 -Dspark.deploy.zookeeper.dir=/spark -Xmx1g org.apache.spark.deploy.master.Master --host 10.163.134.153 --port 7077 --webui-port 8085 --host 10.163.134.153

dhanuka 4829 1 6 23:20 ? 00:00:02 /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.151-1.b12.el7_4.x86_64/jre/bin/java -cp /home/dhanuka/spark-2.2.1-bin-hadoop2.7/conf/:/home/dhanuka/spark-2.2.1-bin-hadoop2.7/jars/* -Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=10.163.134.152:2181 -Dspark.deploy.zookeeper.dir=/spark -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://10.163.134.151:7077

dhanuka 4881 2354 0 23:20 pts/0 00:00:00 grep --color=auto spark

4. Spark admin panels (master web UI on port 8085)

Lead Master (Primary)

Stand-by Master (Secondary)
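In addition to the admin panels, each master's web UI (port 8085 in this setup) serves its state as JSON under /json/, including a status field that is ALIVE on the leader and STANDBY on the standby. A minimal sketch to read that field from the command line, assuming curl is available; parse_master_status and master_status are hypothetical helpers, and the grep/sed parsing is deliberately naive rather than a real JSON parser.

```shell
#!/usr/bin/env bash
# Sketch: print a master's recovery state (e.g. ALIVE or STANDBY) from its web UI JSON.
# parse_master_status and master_status are hypothetical helpers; the parsing assumes
# the JSON contains a single top-level "status" : "VALUE" pair.

# Extract the value of the first "status" field from master JSON read on stdin.
parse_master_status() {
  grep -o '"status"[[:space:]]*:[[:space:]]*"[A-Z_]*"' | head -n 1 \
    | sed 's/.*"\([A-Z_]*\)"$/\1/'
}

# Query http://<host>:<webui-port>/json/ (the web UI port is 8085 in this setup).
master_status() {
  local host="$1" port="${2:-8085}"
  curl -s --max-time 5 "http://$host:$port/json/" | parse_master_status
}

# Usage:
#   master_status 10.163.134.151   # should print ALIVE while it leads
#   master_status 10.163.134.153   # should print STANDBY until a failover
```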

Verify Spark Master Fault Tolerance

1. Kill the primary master (PID 2178 in the ps output from 151):

$ kill -9 2178

2. Within one to two minutes, the standby master (153) takes over as the leader of the workers.
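The failover can also be timed from a script rather than by refreshing the admin panel. A minimal sketch, assuming curl is available and that the standby's web UI JSON at /json/ reports a status field that becomes ALIVE once it has taken over; wait_for_leader is a hypothetical helper, and the 180-second default timeout is an arbitrary margin over the one to two minutes observed here. The usage comment uses pkill -f to find the master JVM by its class name instead of a hard-coded PID.

```shell
#!/usr/bin/env bash
# Sketch: after killing the primary, poll the standby master until it reports ALIVE.
# wait_for_leader is a hypothetical helper; it reads the "status" field from the
# master web UI JSON at http://<host>:<port>/json/ (web UI port 8085 in this setup).
wait_for_leader() {
  local host="$1" port="${2:-8085}" timeout="${3:-180}" interval=5 waited=0 status
  while [ "$waited" -lt "$timeout" ]; do
    status="$(curl -s --max-time 5 "http://$host:$port/json/" \
      | grep -o '"status"[[:space:]]*:[[:space:]]*"[A-Z_]*"' | head -n 1 \
      | sed 's/.*"\([A-Z_]*\)"$/\1/')"
    if [ "$status" = "ALIVE" ]; then
      echo "$host is now the leader after ~${waited}s"
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "$host did not become ALIVE within ${timeout}s (last status: ${status:-unknown})" >&2
  return 1
}

# Usage (run the pkill directly on 151; the pattern matches the master JVM, not the worker):
#   pkill -9 -f org.apache.spark.deploy.master.Master
#   wait_for_leader 10.163.134.153
```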

References:

[1] http://spark.apache.org/docs/latest/spark-standalone.html#cluster-launch-scripts

[2] https://dzone.com/articles/spark-and-zookeeper-fault

[3] https://mapr.com/support/s/article/How-to-enable-High-Availability-on-Spark-with-Zookeeper?language=en_US

[4] https://jaceklaskowski.gitbooks.io/mastering-apache-spark/content/exercises/spark-exercise-standalone-master-ha.html

